2.5 MW data centre is kept cool by evaporative cooling

One of the most energy-efficient data centres in Europe uses only 9% of the power input for supporting services — including cooling. Can anyone better a PUE of 1.1?

Data centres produce a lot of heat. That is the inevitable consequence of the huge amount of IT equipment they are built to house. Removing that heat is an expensive business, both in the cost of the hardware and, especially, in the energy needed to do the job. In addition, there is a host of support services such as uninterruptible power supplies, switchgear, generators, batteries and lighting, which are peripheral to the fundamental task of handling and processing data.

When the total facility power is divided by the power used by the core activity of the data centre (the IT equipment), the result is a figure called power usage effectiveness (PUE). Data-centre specialists regard a PUE of 1.5 or lower as indicating an ‘efficient’ data centre. A PUE of 1.2 is ‘very efficient’, according to the Green Grid, a global consortium of IT companies and professionals seeking to improve energy efficiency in data centres.

So how do you describe a new data centre in Bedford which is designed for a PUE of 1.1?

Translating a PUE into the relative proportions of power used by the IT equipment itself and by its support systems is a little tricky. But a metric favoured in Europe, DCiE (data centre infrastructure efficiency), gives a much better feel. DCiE is the reciprocal of PUE, so a PUE of 1.1 translates to a DCiE of 0.91, indicating that only 9% of total power is used by supporting services, of which only about half is for cooling. That compares with 17% for a PUE of 1.2 and 33% for a PUE of 1.5.
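The conversion is easy to check. A minimal Python sketch, assuming PUE is defined as total facility power divided by IT equipment power, reproduces the percentages above; the function names are illustrative only.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    return total_facility_kw / it_kw


def dcie(pue_value: float) -> float:
    """Data centre infrastructure efficiency: the reciprocal of PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be less than 1.0")
    return 1.0 / pue_value


def support_share(pue_value: float) -> float:
    """Fraction of total power taken by supporting services (1 - DCiE)."""
    return 1.0 - dcie(pue_value)


for p in (1.1, 1.2, 1.5):
    print(f"PUE {p}: DCiE {dcie(p):.2f}, support services {support_share(p):.0%}")
```

Run as written, the loop prints DCiE values of 0.91, 0.83 and 0.67, with support-service shares of 9%, 17% and 33%.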

Blue Chip describes its new data centre at Bedford as one of the greenest and most efficient in Europe. One of the keys to its very low PUE is adiabatic cooling with cold-aisle air containment. Other support services are also selected for low energy consumption.

The adiabatic cooling system was designed and implemented by EcoCooling. It is the company’s biggest cooling project to date and is expected to reduce the cooling energy bill by 95% compared with traditional CRAC (computer room air conditioning) units. However, as EcoCooling’s managing director Alan Beresford explains, this approach to cooling a data centre was adopted following a detailed appraisal of operating conditions in the data suites.

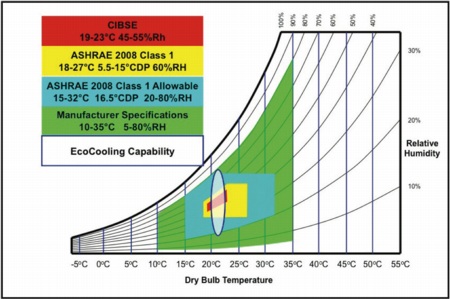

There are several codes for environmental conditions in data centres (Fig. 1).

The most stringent code is from CIBSE, with a design temperature of 21°C ±2 K and a relative humidity of 45 to 55%.

Rather more relaxed is an ASHRAE standard, with a temperature range of 18 to 27°C and a dewpoint of 5.5 to 15°C — an RH of 25 to 60%.

ASHRAE also has a more relaxed set of ‘allowable’ environmental conditions, with temperatures from 15 to 32°C and a maximum dewpoint of 16.5°C, corresponding to a relative humidity of 20 to 80%.

The IT manufacturers themselves are even less demanding: their equipment tolerates temperatures from 10 to 35°C and RH from 5 to 80%.

The capabilities of evaporative cooling, according to Alan Beresford, are also indicated in Fig. 1. Temperature is maintained in the low 20s (°C), and relative humidity stays within both ASHRAE’s allowable conditions and the manufacturers’ tolerances.

So why the huge variation in environmental standards for data centres? Alan Beresford traces the more stringent conditions, especially for relative humidity, back to the days of punched paper tape and magnetic tape, which are simply not seen in data centres today. Older IT equipment such as mainframes was also more sensitive to the static electricity problems associated with low humidity.

Blue Chip was formed in 1987 by engineers from IBM. This is the company’s third data centre in the Bedford area and, as engineers, its team was prepared to accept the manufacturers’ specifications for environmental conditions.

For the comfort of staff working in the data suites, the cold-aisle design temperature is 16°C, resulting in a hot-aisle temperature of around 25°C.

As its 2.5 MW power load indicates, this is a large data centre. It has a total area of 2300 m², of which over 840 m² is for the three data suites. The first phase involves the installation of 192 racks out of a total capacity of 384.

Tempered air is delivered through a 1.2 m-deep raised floor at a constant pressure of 9 Pa and enters the enclosed cold aisles through floor grilles. Each grille can deliver 0.5 m³/s, typically enough to cool 6 kW of load. The total IT load is 1.5 MW, requiring up to 140 m³/s of air.
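The grille figures can be checked against the sensible-heat relation Q = ρ·V·c_p·ΔT. The sketch below assumes typical air properties (ρ ≈ 1.2 kg/m³, c_p ≈ 1.005 kJ/kg K), which the article does not state. On those assumptions, 0.5 m³/s carrying 6 kW warms by about 10 K, in line with a 16°C cold aisle and a hot aisle of around 25°C, and a 1.5 MW load at 6 kW a grille needs 250 grilles, or 125 m³/s, within the quoted maximum of 140 m³/s. The sketch ignores leakage and uneven rack loads.

```python
import math

AIR_DENSITY = 1.2   # kg/m³, assumed (air at about 20°C, sea level)
AIR_CP = 1.005      # kJ/(kg·K), assumed specific heat of dry air


def temperature_rise(load_kw: float, airflow_m3s: float) -> float:
    """Air temperature rise (K) across the racks: dT = Q / (rho * V * cp)."""
    return load_kw / (AIR_DENSITY * airflow_m3s * AIR_CP)


def grilles_needed(it_load_kw: float, kw_per_grille: float = 6.0) -> int:
    """Number of floor grilles needed if each grille handles kw_per_grille."""
    return math.ceil(it_load_kw / kw_per_grille)


dt = temperature_rise(6.0, 0.5)
print(f"Rise across a 6 kW grille: {dt:.1f} K, hot aisle about {16 + dt:.0f}°C")
n = grilles_needed(1500.0)
print(f"Grilles for 1.5 MW: {n}, total airflow {n * 0.5:.0f} m³/s")
```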

Air at such a low pressure can be delivered by low-energy axial fans rather than centrifugal fans, which deliver air at much higher pressure but use three times as much energy.
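Why pressure matters follows from the basic fan power relation: shaft power equals airflow times pressure rise divided by fan efficiency, so for the same airflow and efficiency, power rises in proportion to pressure. In this sketch the 60% efficiency and the 27 Pa comparison pressure are hypothetical, and only the 9 Pa floor pressure is modelled. Losses across filters and evaporative pads, which the article does not quantify, would add to the real figure, and differences between axial and centrifugal fan designs are not modelled.

```python
def fan_shaft_power_kw(airflow_m3s: float, pressure_pa: float, efficiency: float) -> float:
    """Fan shaft power in kW: airflow (m³/s) x pressure rise (Pa) / efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return airflow_m3s * pressure_pa / efficiency / 1000.0


AIRFLOW = 140.0     # m³/s, peak airflow quoted in the article
EFFICIENCY = 0.6    # hypothetical fan efficiency

low = fan_shaft_power_kw(AIRFLOW, 9.0, EFFICIENCY)     # 9 Pa floor pressure (article)
high = fan_shaft_power_kw(AIRFLOW, 27.0, EFFICIENCY)   # hypothetical higher-pressure duty
print(f"{low:.1f} kW at 9 Pa vs {high:.1f} kW at 27 Pa")
```

With these assumed values the sketch prints 2.1 kW against 6.3 kW: tripling the pressure triples the power.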

When the outside air temperature is lower than 16°C, 100% fresh air can be used, with the only power load being the fans.

At higher temperatures, adiabatic cooling comes into effect, adding pump power to the electrical load, plus the cost of water. The maximum power required for the fans and pumps is just 4 kW. The variable-speed fans are linked to a SCADA control system. The total design air flow rate is 160 m³/s.
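How far adiabatic cooling can bring the air down depends on the outdoor wet-bulb temperature. A common textbook model of a direct evaporative cooler gives the leaving-air temperature as T_supply = T_db − ε(T_db − T_wb), where ε is the saturation effectiveness. The sketch below is not EcoCooling's design method: the 0.85 effectiveness is an assumed value, and the wet-bulb temperature is estimated from dry-bulb temperature and relative humidity using Stull's (2011) empirical approximation, valid roughly for 5 to 99% RH and −20 to 50°C at sea-level pressure.

```python
import math


def wet_bulb_stull(t_dry_c: float, rh_percent: float) -> float:
    """Approximate wet-bulb temperature (°C) from Stull's (2011) empirical fit.

    Roughly valid for 5-99% RH and -20 to 50°C at standard sea-level pressure.
    """
    t, rh = t_dry_c, rh_percent
    return (t * math.atan(0.151977 * math.sqrt(rh + 8.313659))
            + math.atan(t + rh)
            - math.atan(rh - 1.676331)
            + 0.00391838 * rh ** 1.5 * math.atan(0.023101 * rh)
            - 4.686035)


def evaporative_supply_temp(t_dry_c: float, t_wet_c: float,
                            effectiveness: float = 0.85) -> float:
    """Leaving-air temperature of a direct evaporative cooler (assumed effectiveness)."""
    return t_dry_c - effectiveness * (t_dry_c - t_wet_c)


# Example: a warm afternoon at 30°C and 40% RH (illustrative conditions).
t_wb = wet_bulb_stull(30.0, 40.0)
print(f"Wet bulb {t_wb:.1f}°C, supply air {evaporative_supply_temp(30.0, t_wb):.1f}°C")
```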

There are six evaporative coolers per fan assembly. Their water consumption is about 1.6 m³ per kW of cooling load per year. Water costs about £1 a cubic metre, and about 60% of it is evaporated to provide adiabatic cooling.
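The water figures lend themselves to a quick estimate. The three constants below come from the article; the 1.5 MW cooling load in the usage lines is an assumption, taken as equal to the IT load quoted earlier.

```python
WATER_M3_PER_KW_YEAR = 1.6   # article: about 1.6 m³ per kW of cooling load per year
WATER_GBP_PER_M3 = 1.0       # article: about £1 a cubic metre
EVAPORATED_FRACTION = 0.6    # article: about 60% of the water is evaporated


def annual_water(cooling_load_kw: float) -> tuple[float, float, float]:
    """Return (m³ used, £ cost, m³ evaporated) per year for a given cooling load."""
    used = WATER_M3_PER_KW_YEAR * cooling_load_kw
    return used, used * WATER_GBP_PER_M3, used * EVAPORATED_FRACTION


used, cost, evaporated = annual_water(1500.0)  # assumed 1.5 MW cooling load
print(f"{used:.0f} m³/year, about £{cost:,.0f}, of which {evaporated:.0f} m³ evaporated")
```

On that assumption the water bill comes to roughly 2400 m³ and £2400 a year, small beside the electricity savings discussed below.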

Local meteorological data shows that outdoor temperatures exceed 21°C for only 5% of the time (438 h a year), but that they can reach 35°C. To cover the rare very hot days when evaporative cooling cannot maintain the desired conditions, and to provide backup cooling capacity, a completely separate chiller-based system with air-cooled condensers will come into operation. There are two CRAC units in each suite, and they are expected to be required for only three or four days a year.
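The three operating regimes can be summarised as a simple control rule. This sketch is hypothetical and much simpler than a real SCADA sequence: the 16°C fresh-air limit comes from the article, but the maximum acceptable supply temperature and the 0.85 evaporative effectiveness are assumed parameters, and the switch to the CRAC units is modelled as happening whenever evaporative cooling alone cannot reach that limit. The last line checks the arithmetic behind "5% of the time (438 h a year)".

```python
from enum import Enum

FRESH_AIR_LIMIT_C = 16.0  # article: 100% fresh air below 16°C


class Mode(Enum):
    FRESH_AIR = "fresh air, fans only"
    ADIABATIC = "fresh air plus evaporative cooling"
    CRAC = "standby chiller-based CRAC units"


def select_mode(t_dry_c: float, t_wet_c: float, max_supply_c: float,
                effectiveness: float = 0.85) -> Mode:
    """Pick a cooling mode; max_supply_c and effectiveness are assumed inputs."""
    if t_dry_c < FRESH_AIR_LIMIT_C:
        return Mode.FRESH_AIR
    # Direct evaporative cooler leaving-air temperature.
    supply = t_dry_c - effectiveness * (t_dry_c - t_wet_c)
    if supply <= max_supply_c:
        return Mode.ADIABATIC
    return Mode.CRAC


def hours_above(hourly_temps_c: list[float], threshold_c: float) -> int:
    """Count hours in a series of hourly readings that exceed threshold_c."""
    return sum(1 for t in hourly_temps_c if t > threshold_c)


print(round(0.05 * 8760))  # 5% of an 8760 h year
```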

Both the adiabatic and CRAC systems have N+1 redundancy.
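N+1 means installing one more unit than is needed to carry the full load, so that any single unit can fail or be taken out for maintenance without loss of cooling. A minimal sketch follows; the unit capacities used in any call are hypothetical, since the article does not give them.

```python
import math


def units_for_n_plus_1(load_kw: float, unit_capacity_kw: float) -> int:
    """N units cover the load; one extra unit lets any single unit fail."""
    n = math.ceil(load_kw / unit_capacity_kw)
    return n + 1


def survives_single_failure(units: int, load_kw: float, unit_capacity_kw: float) -> bool:
    """True if the remaining units can still carry the load after one failure."""
    return (units - 1) * unit_capacity_kw >= load_kw


# Hypothetical example: 750 kW of load served by 150 kW units.
print(units_for_n_plus_1(750.0, 150.0))
```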

At the start of the project, only 1.5 MW of electricity was available to the site, and the plan was to bring on the site’s standby generators when mechanical cooling was required. Since then, more power has become available.

The evaporative cooling system is expected to achieve an EER of 30 to 40, about 10 times better than a CRAC system. That efficiency is expected to cut the energy used for cooling by 95%, a saving of about £500 000 a year on the electricity bill. Overall, the power requirement of this data centre has been halved.
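EER is the ratio of heat removed to electrical power consumed, so the electrical power for cooling is the heat load divided by the EER. For the same load, the fractional saving from a switch depends only on the ratio of the two EERs: 1 − EER_old/EER_new. A tenfold improvement saves 90%; a 95% saving implies a twentyfold one. The sketch assumes a constant load all year, and the 1.5 MW load, the CRAC EER of 3 and the electricity price in the usage lines are hypothetical, so its output should not be read as reproducing the £500 000 figure.

```python
def cooling_power_kw(heat_load_kw: float, eer: float) -> float:
    """Electrical power (kW) needed to remove heat_load_kw at a given EER."""
    return heat_load_kw / eer


def annual_cost_gbp(power_kw: float, price_gbp_per_kwh: float, hours: float = 8760.0) -> float:
    """Annual electricity cost for a constant power draw."""
    return power_kw * hours * price_gbp_per_kwh


def fractional_saving(eer_old: float, eer_new: float) -> float:
    """Share of cooling energy saved for the same heat load."""
    return 1.0 - eer_old / eer_new


LOAD_KW = 1500.0  # hypothetical: cooling load taken equal to the 1.5 MW IT load
PRICE = 0.10      # hypothetical electricity price, £/kWh

for label, eer in (("CRAC (hypothetical EER 3)", 3.0), ("evaporative (EER 35)", 35.0)):
    p = cooling_power_kw(LOAD_KW, eer)
    print(f"{label}: {p:.0f} kW, about £{annual_cost_gbp(p, PRICE):,.0f} a year")
print(f"Saving: {fractional_saving(3.0, 35.0):.0%}")
```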

Support for the project came from the Carbon Trust in the form of an interest-free loan to be repaid over five years. The payback on the extra capital investment for the adiabatic cooling is expected to be only about six months.

Having substantially reduced the energy requirement for its data centre, Blue Chip aims to achieve carbon neutrality by supporting the planting of trees in the nearby Forest of Marston Vale, which occupies an area of 61 square miles between Bedford and Milton Keynes. The landscape has been scarred by decades of clay extraction, brick making and landfill. The restoration of the area has already seen the planting of a million trees, and the plan is to plant five million more by 2030. Blue Chip’s involvement will see the planting of over 750 000 trees: 1987 trees for each of the 384 racks in the new data centre.

Alan Beresford is confident about the role of evaporative cooling in the future. EcoCooling was set up just five years ago, and its first projects were the cooling of UPS (uninterruptible power supply) rooms. UPS batteries need to be kept below 25°C or their life is shortened. Customers then prompted EcoCooling to consider evaporative cooling for data rooms, leading to the current project, which the client embarked upon having seen only a 30 kW system.

Surely it cannot be long before the Blue Chip installation starts popping up in award schemes.

EcoCooling Ltd is at Symonds Farm Business Park, Newmarket Road, Bury St Edmunds IP28 6RE.